Load Balancing Between Instances

Context

Microservices are being adopted. Microservices can be deployed with a configurable number of instances. There are mechanisms to discover service instances. Communication with the microservices is request-response based.

Problem

- The dynamic deployment environment sometimes leads to communication errors because a microservice instance was relocated or torn down

- The load is distributed unevenly between the instances of a microservice. In an extreme case, all requests go to just one instance.

Solution

Use a (server-side) load balancing mechanism to distribute the load between (healthy) microservice instances.

Using a load balancer is already a well-established pattern in the microservice literature. However, we found that there are different ways to do load balancing:

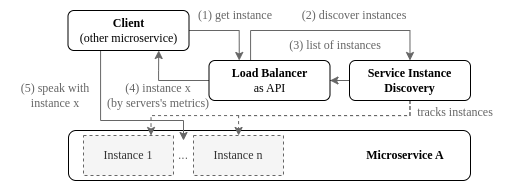

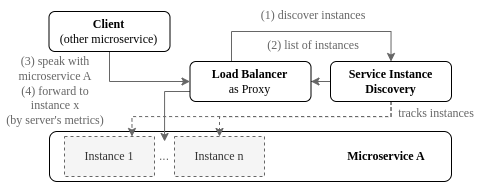

A server-side load balancer (or external load balancer) requires no knowledge on the client side. Instead of the microservice instances, the load balancer component becomes the clients' point of access to the microservice. The load balancer either returns the address of a suitable microservice instance, with which the client then communicates directly (Figure 1), or acts as a proxy and forwards the traffic to the instance itself (Figure 2). The load balancing is transparent to the clients.
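A minimal Python sketch of the two server-side variants, assuming a static list of instances with a health flag and plain round robin as the selection strategy. The names `Instance`, `ServerSideLoadBalancer`, `locate`, and `proxy` are hypothetical, and the `send` callable is an assumed stand-in for real network forwarding.

```python
from dataclasses import dataclass
from itertools import count
from typing import Callable


@dataclass(frozen=True)
class Instance:
    """Hypothetical record of one microservice instance known to the balancer."""
    address: str
    healthy: bool = True


class ServerSideLoadBalancer:
    """Hypothetical external load balancer supporting both variants.

    locate(): return an instance address to the client (Figure 1).
    proxy():  forward the request to an instance itself (Figure 2).
    """

    def __init__(self, instances: list[Instance]) -> None:
        self.instances = instances
        self._counter = count()

    def _next_healthy(self) -> Instance:
        healthy = [i for i in self.instances if i.healthy]
        if not healthy:
            raise RuntimeError("no healthy instance available")
        # Plain round robin over the instances that are currently healthy.
        return healthy[next(self._counter) % len(healthy)]

    def locate(self) -> str:
        # Address-locator variant: the client then calls this address directly.
        return self._next_healthy().address

    def proxy(self, request: str, send: Callable[[str, str], str]) -> str:
        # Proxy variant: `send` is an assumed stand-in for real network forwarding.
        return send(self._next_healthy().address, request)


lb = ServerSideLoadBalancer([
    Instance("10.0.0.1:8080"),
    Instance("10.0.0.2:8080", healthy=False),  # skipped while unhealthy
    Instance("10.0.0.3:8080"),
])
print(lb.locate())  # 10.0.0.1:8080
print(lb.locate())  # 10.0.0.3:8080
print(lb.proxy("GET /orders", lambda addr, req: f"{addr} handled {req}"))
# 10.0.0.1:8080 handled GET /orders
```

In both variants the client never chooses the instance itself. Because the index is taken modulo the current healthy list, the rotation shifts when an instance changes health; real load balancers also probe instance health actively instead of relying on a flag.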

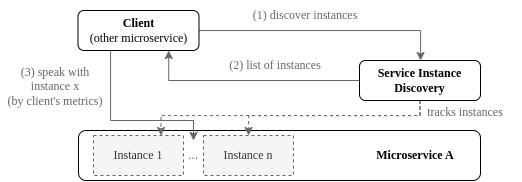

A client-side load balancer (or internal load balancer) requires knowledge on the client side. The interaction with a microservice becomes a two-step process (Figure 3): first, the client fetches all available instances from the service instance discovery component; second, it selects the microservice instance to communicate with based on its own criteria (such as the lowest response time). We classify such implementations as service instance discovery rather than as load balancing.
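To illustrate the two-step interaction, a minimal Python sketch of a client that first fetches instances from a discovery component and then picks the one with the lowest observed response time. `InMemoryRegistry` is a hypothetical stand-in for a real registry such as Eureka, and the exponential moving average is an assumed way to track response times, not something the pattern prescribes.

```python
class InMemoryRegistry:
    """Hypothetical stand-in for a service instance discovery component."""

    def __init__(self, services: dict[str, list[str]]) -> None:
        self._services = services

    def instances(self, service: str) -> list[str]:
        return list(self._services.get(service, []))


class ClientSideBalancer:
    """Hypothetical client-side selection based on locally observed response times."""

    def __init__(self, registry: InMemoryRegistry, alpha: float = 0.5) -> None:
        self.registry = registry
        self.alpha = alpha  # weight of the newest sample in the moving average
        self.avg_ms: dict[str, float] = {}

    def choose(self, service: str) -> str:
        # Step 1: fetch all currently available instances.
        candidates = self.registry.instances(service)
        if not candidates:
            raise LookupError(f"no instances registered for {service!r}")
        # Step 2: apply the client's own criterion. Instances without
        # measurements count as 0 ms, so each one gets tried at least once.
        return min(candidates, key=lambda addr: self.avg_ms.get(addr, 0.0))

    def record(self, address: str, elapsed_ms: float) -> None:
        # Exponential moving average of the response times seen by this client.
        prev = self.avg_ms.get(address)
        if prev is None:
            self.avg_ms[address] = elapsed_ms
        else:
            self.avg_ms[address] = self.alpha * elapsed_ms + (1 - self.alpha) * prev


registry = InMemoryRegistry({"orders": ["10.0.0.1:8080", "10.0.0.2:8080"]})
client = ClientSideBalancer(registry)
client.record("10.0.0.1:8080", 120.0)
client.record("10.0.0.2:8080", 40.0)
print(client.choose("orders"))  # 10.0.0.2:8080
```

Since each client keeps its own statistics, different clients may pick different instances for the same service; there is no central view of the load.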

If you use publish-subscribe based communication with the microservice, consider using a message broker to balance the load between instances.
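A minimal Python sketch of the competing-consumers idea behind broker-based load balancing, using the standard library's thread-safe `queue.Queue` as a stand-in for a broker channel. Several consumer threads, standing in for microservice instances, are bound to the same channel, and each message is handled by exactly one of them. The function name `run_competing_consumers` is hypothetical; in practice a broker such as RabbitMQ would replace the in-process queue.

```python
import queue
import threading
from collections import Counter


def run_competing_consumers(messages: list[str], n_consumers: int) -> Counter:
    """Deliver each message to exactly one of several consumers sharing a channel."""
    channel: queue.Queue = queue.Queue()  # stand-in for a broker queue/channel
    handled: Counter = Counter()          # messages processed per consumer
    lock = threading.Lock()

    def consumer(name: str) -> None:
        while True:
            msg = channel.get()  # blocks until a message is available
            if msg is None:      # sentinel: no more work for this consumer
                return
            with lock:
                handled[name] += 1

    workers = [
        threading.Thread(target=consumer, args=(f"instance-{i}",))
        for i in range(n_consumers)
    ]
    for w in workers:
        w.start()
    for msg in messages:
        channel.put(msg)
    # One sentinel per consumer; FIFO order puts them behind all real messages.
    for _ in workers:
        channel.put(None)
    for w in workers:
        w.join()
    return handled


print(run_competing_consumers([f"event-{i}" for i in range(100)], n_consumers=3))
```

The split across instances depends on thread scheduling, so the printed counts vary between runs; only the total of 100 handled messages is fixed.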

The load distribution is required in order to build a scalable and elastic system, and contributes to fault tolerance and high availability. Additionally, load balancers enable zero-downtime deployment techniques like canary releases and instant rollbacks by routing between different deployed versions of a microservice.
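Routing between deployed versions can be sketched in Python as a weighted split, assuming a fixed list of addresses per version and a configurable share of traffic for the canary. `VersionRouter` and its methods are hypothetical; real deployments would configure this in a load balancer, gateway, or service mesh instead.

```python
import random
from typing import Optional


class VersionRouter:
    """Hypothetical router splitting traffic between a stable and a canary version."""

    def __init__(self, stable: list[str], canary: list[str],
                 canary_weight: float = 0.0,
                 rng: Optional[random.Random] = None) -> None:
        self.stable = stable
        self.canary = canary
        self.canary_weight = canary_weight  # share of requests sent to the canary
        self.rng = rng or random.Random()

    def route(self) -> str:
        # random() is in [0, 1), so weight 0.0 never and 1.0 always picks the canary.
        use_canary = bool(self.canary) and self.rng.random() < self.canary_weight
        pool = self.canary if use_canary else self.stable
        return self.rng.choice(pool)

    def promote(self) -> None:
        # Canary looks healthy: send all traffic to the new version.
        self.canary_weight = 1.0

    def rollback(self) -> None:
        # Instant rollback: stop routing to the canary; its instances stay deployed.
        self.canary_weight = 0.0


router = VersionRouter(["v1-a:8080", "v1-b:8080"], ["v2-a:8080"],
                       canary_weight=0.1, rng=random.Random(42))
sent_to_canary = sum(router.route().startswith("v2") for _ in range(1000))
print(sent_to_canary)
```

With a weight of 0.1, about one request in ten reaches the canary. Because the old instances keep running, a rollback is only a change of the weight, not a redeployment.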

Maturity

Proposed, requires evaluation.

Sources of Evidence

L3:

- Microservices need service discovery and load balancing in order to be fully functional

- Chose Ribbon as load balancer (in combination with Eureka for discovery, Hystrix as circuit breaker, Zuul as edge server)

- Tech selection: integration with used framework (Spring), and other components (Netflix OSS)

- Helps to make system more resilient during service calls

- Migration patterns: internal and external load balancer

L5:

- Part of category "Communication/Integration"

- Also referred to as "workload intensity distribution"

- Need for further research on load distribution (for multi cloud deployment with containers)

L8:

- Use sidecars for monitoring and for more fine-grained control over load balancing and other factors

L9:

- Example where load balancing with starting more instances led to increased performance (authentication time)

L12:

- scalability requires distributing load on individual instances

- load balancer does the job

- get available instances from service discovery

- internal load balancer per microservice (at least logically, not sure if really one load balancer per service)

- not also used as external load balancer

- Ribbon as part of Netflix OSS project

- can serve as internal load balancer

- HAProxy as alternative

- load balancer used in combination with service discovery and circuit breaker

L13:

- router fabric to get requests to specific instances of microservice

- often does load balancing as well

- as well as isolating instances in failed state (=> circuit breaker)

L15:

- serverless platforms do load balancing out of the box

- round robin is often used

- required for horizontal scaling: did some experiments

- in low stress warm service invocation, load distribution was uneven across hosts

L16:

- circuit breaker pattern works perfectly with load balancer pattern

- load balancer only balances to healthy nodes (known via the circuit breaker)

- where the circuit is half-open: lower number of requests

- broken service not used for the time being

- plays role in deployment scenarios migrating to new version

- once the new service version is deployed, the load balancer is triggered to reroute to it

- allows zero-downtime

- same in version rollbacks
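How a load balancer can use circuit breaker state, as described in the L16 notes, sketched in Python: instances whose circuit is open are skipped, and half-open instances receive only a small, configurable share of requests. `Breaker`, `BreakerAwareBalancer`, the threshold of three failures, and the 30-second reset timeout are all assumptions for illustration, not values from the source.

```python
import random
import time
from dataclasses import dataclass
from typing import Optional

CLOSED, OPEN, HALF_OPEN = "closed", "open", "half-open"


@dataclass
class Breaker:
    """Hypothetical per-instance circuit breaker."""
    failure_threshold: int = 3
    reset_timeout_s: float = 30.0
    state: str = CLOSED
    failures: int = 0
    opened_at: float = 0.0

    def current_state(self, now: float) -> str:
        # After the timeout an open circuit becomes half-open (trial phase).
        if self.state == OPEN and now - self.opened_at >= self.reset_timeout_s:
            self.state = HALF_OPEN
        return self.state

    def on_success(self) -> None:
        self.state, self.failures = CLOSED, 0

    def on_failure(self, now: float) -> None:
        self.failures += 1
        if self.state == HALF_OPEN or self.failures >= self.failure_threshold:
            self.state, self.opened_at = OPEN, now


class BreakerAwareBalancer:
    """Hypothetical balancer that only routes to instances the breakers allow."""

    def __init__(self, addresses: list[str], half_open_share: float = 0.1,
                 rng: Optional[random.Random] = None) -> None:
        self.breakers = {addr: Breaker() for addr in addresses}
        self.half_open_share = half_open_share  # share of requests for trial instances
        self.rng = rng or random.Random()

    def choose(self, now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        states = {a: b.current_state(now) for a, b in self.breakers.items()}
        closed = [a for a, s in states.items() if s == CLOSED]
        half_open = [a for a, s in states.items() if s == HALF_OPEN]
        # Open (broken) instances are never chosen; half-open ones get only a few requests.
        if half_open and (not closed or self.rng.random() < self.half_open_share):
            return self.rng.choice(half_open)
        if closed:
            return self.rng.choice(closed)
        raise RuntimeError("no instance available: all circuits are open")
```

A successful trial request (`on_success`) closes the circuit again and the instance rejoins the normal rotation; a failed one reopens it for another timeout period.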

L21:

- load balancing for scalability reasons

- comparison to monolith: only balancing external requests

- microservices need to balance internal and external ones

- (+) far more uniform load balancing

- only balancing external requests may not be enough

L23:

- load balancer as example for what is being combined in cloud applications

L25:

- API gateway can serve as load balancer since it already knows the locations and addresses of all services

- service discovery can serve as load balancer (client-side load balancing algorithm to choose comm. partner)

L30:

- utilizing containers and infrastructure such as k8s

- rolling updates, automated scaling, rebalancing in case of node failure

- results in a much more dynamic and volatile environment than traditional deployments

L31:

- sketch to use internal load balancer

- get instances from service registry, choose one of them (=> client-side)

- Migration Pattern: Introduce internal load balancer

- Reuse situation: scalability, high availability, dynamicity

- Context: service registry in place, multiple instances, services are clients of other services

- Problem

- How to base load balancing on client needs/conditions

- How to balance nodes without setting up external load balancer

- Solution

- Each service (as a client) has an internal load balancer

- fetch list of available instances from registry

- internal load balancer to balance load using local metrics (e.g. response time)


- (+) Removes burden of setting up external load balancer

- (+) Client-specific load balancing mechanism, multiple might exist

- (-) an internal load balancer that integrates with the registry must be created for each language used

- (-) load balancing is not centralized

- Technology: Ribbon, for Java, works well with Eureka as service registry

- Migration Pattern: Introduce external load balancer

- Reuse situation: scalability, high availability, dynamicity

- Context: service registry in place, multiple instances, services are clients of other services

- Problem

- How to balance load with the fewest client code changes?

- How to have centralized load balancing approach for all services?

- Solution

- One external load balancer

- Uses service registry

- centralized algorithm for load balancing

- Either as proxy (not recommended), or as instance address locator

- Or use a registry that also does load balancing


- (-) local metrics (e.g. response time) cannot be used

- (-) no individual client needs fulfilled

- (-) if as proxy: high availability load balancing cluster required

- Technology: Amazon Elastic Load Balancing, Nginx, HAProxy, Eureka

- Example (case study) uses an internal load balancer for systematic load balancing

- 2 of 3 cases have internal

- 2 of 3 cases have external

- 1 of 3 cases seems to have both

L34:

- load balancing => resilience to failure

- keyword in class "performance" => contributes to overall system performance

L43:

- volatility considerations lead to challenges in reliability

- managed and reduced by mechanisms as load balancing

L44:

- Example system with load balancing mechanism per service (at least logically, not sure if also deployed)

- Speaking of a load balancing layer

L45:

- As future work to extend their architecture model with components as load balancing (probably means external?)

L49:

- Programmable infrastructure to manage complexity

- e.g. load balancing

L52:

- Example system with load balancing mechanism per service (at least logically, not sure if also deployed)

- Same as in L44

L53:

- API gateway to be load balancing proxy for web services

- Message broker with MQTT has similarities

- Load balancing by binding multiple consumers to the same event channel

- Name resolution policy can be used for load balancing

L55:

- API gateway with Ribbon

- Does load balancing in combination with service registry

- Also does circuit breaking

L58:

- Example for easy version migration via load balancer switching

- only very little downtime

- Can also operate both versions in parallel to keep possibility of rollback open

- Helps to do heavy refactoring with difficult schema migration challenges

L59:

- Load balancers for horizontal scaling

L61:

- load balancing as one of microservice challenges

- Load balancer as found design pattern

- As "infrastructure service" -> "system level management": prominent growth in recent years

- Attracting strongest attention of researchers

L63:

- Context: MiCADO prototype

- realizes load balancing among Data node services via HAProxy

- farm of nodes shares incoming traffic and spreads it across Data nodes

- ensures balanced load

- current solution: round robin => but other techniques could be used: least connections or predictive techniques

- layer transparent for users

- additional features as caching, HTTP compression, SSL offloading

- HAProxy: high performance, reliability, well documented features

- HAProxy can become a bottleneck => same scalability mechanism as for Data nodes (collect metrics, start/stop instances)

- Prometheus collects the information

- Alert Executor can instruct Occopus to start/stop instances

- New ones registered with Consul
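The L63 notes contrast the current round-robin setup with alternatives such as least connections. A minimal Python sketch of both selection strategies, assuming the balancer itself tracks open connections per node; the class names are hypothetical and the node names only echo the Data nodes of the example. HAProxy exposes both strategies through its `balance` setting (`roundrobin`, `leastconn`).

```python
from itertools import cycle


class RoundRobin:
    """Rotate through the nodes regardless of their current load."""

    def __init__(self, nodes: list[str]) -> None:
        self._ring = cycle(nodes)

    def pick(self) -> str:
        return next(self._ring)


class LeastConnections:
    """Pick the node with the fewest open connections (ties: first listed)."""

    def __init__(self, nodes: list[str]) -> None:
        self.active = {node: 0 for node in nodes}

    def pick(self) -> str:
        node = min(self.active, key=lambda n: self.active[n])
        self.active[node] += 1  # connection opened
        return node

    def release(self, node: str) -> None:
        self.active[node] -= 1  # connection closed


rr = RoundRobin(["data-1", "data-2", "data-3"])
print([rr.pick() for _ in range(4)])  # ['data-1', 'data-2', 'data-3', 'data-1']

lc = LeastConnections(["data-1", "data-2", "data-3"])
first, second = lc.pick(), lc.pick()  # data-1, data-2
lc.release(first)                     # data-1 finished its request
print(lc.pick())                      # data-1 (0 active, listed before data-3)
```

Round robin needs no state about the nodes, while least connections reacts to long-running requests piling up on one node at the cost of tracking every connection.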

LN21:

- Containers can be managed by clusters doing

- among others: service discovery, service registry, load balancing, config management

- Technologies: Spring Cloud, Mesos, Kubernetes, Docker Swarm

LN43:

- Docker => Kubernetes => horizontal scaling, service discovery, load balancing

LN48:

- HAProxy as load balancer with round robin to distribute load on different instances

- Component view over system that has load balancer + API gateway as guard to the system taking requests from outside

LM43:

- Context: SLR findings about microservices in DevOps

- S21 strategies for load balancing: Traefik, HTTP reverse Proxy, round-robin

- 2 studies reported about the load balancer pattern

- Table 10 lists internal and external load balancer

- Load balancer pattern borrowed from monoliths and SOA

- can accommodate increasing load on services

LM47:

- Context: SLR with tactics to achieve quality attributes

- Load balancing

- Motivation: high load on one instance decreases overall system performance

- distribute load among instances of a microservice to maximize speed and capacity utilization

- types:

- centralized load balancing: most common

- centralized load balancer between client and services

- distributes load across multiple services

- algos: round robin, JSQ, JiQ, hybrid scheduling, adaptive SQLB

- transparent to clients

- checks health of service pool underneath

- Tech: Nginx, HA Proxy

- distributed load balancing: on the upswing

- set of schedulers or implemented by the client

- get list of available servers, then distribute requests according to an algorithm

- algos: FCFS, random

- lower storage requirement than centralized: no need to store state (state is pushed to the client side)

- but wastes bandwidth, as clients ask for instances frequently

- coordination among clients => complexity

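Three of the algorithms named in the LM47 notes, sketched in Python as plain selection functions: join-the-shortest-queue (JSQ) and join-idle-queue (JiQ) for a centralized dispatcher, and random selection as a simple distributed option. The function names are hypothetical, queue lengths and idle reports are assumed to be available to the caller, and the sketch omits how that state is collected, which is where the storage and bandwidth trade-offs noted above come in.

```python
import random


def join_shortest_queue(queue_lengths: dict[str, int]) -> str:
    """JSQ: a central dispatcher sends the request to the server with the fewest queued jobs."""
    return min(queue_lengths, key=lambda s: queue_lengths[s])


def join_idle_queue(idle_servers: list[str], servers: list[str],
                    rng: random.Random) -> str:
    """JiQ: servers report when they become idle; use an idle one if known,
    otherwise fall back to a random server."""
    if idle_servers:
        return idle_servers.pop(0)
    return rng.choice(servers)


def pick_random(servers: list[str], rng: random.Random) -> str:
    """Random selection, e.g. by a client that has fetched the server list itself."""
    return rng.choice(servers)


lengths = {"s1": 4, "s2": 1, "s3": 2}
print(join_shortest_queue(lengths))  # s2

idle = ["s3"]  # s3 has reported itself idle
rng = random.Random(7)
print(join_idle_queue(idle, list(lengths), rng))  # s3
print(join_idle_queue(idle, list(lengths), rng))  # random choice, idle list is empty
```

JSQ needs up-to-date queue lengths for every server on each decision, whereas JiQ only needs idle reports, which is why it is usually considered cheaper to run at scale.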

LM48:

- Context: microservice migration; describes an example project (FX Core) and compares it back to the monolith

- Load balancing is critical for service discovery to ensure load is balanced across replicas

- can be done via DNS to resolve hostname

- or via look-up for different replica IPs each time

- via dispatcher service (scheduler)

- or appointing clients to decide which replica to use

- typically part of service discovery or orchestration

- for distributing messages and events

- Load balancing implemented by Docker Swarm and by RabbitMQ

- Swarm uses built-in service discovery for load balancing between container replicas

- in round-robin fashion

- one could consider proximity or load as criterion to distribute load

- Fig 6

- For active/active failover mode (multiple parallel active instances)

- Orchestration (e.g., Kubernetes, Mesosphere Marathon, Docker Swarm) also does load balancing (among other features)
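The LM48 notes mention resolving a hostname via DNS or looking up the replica IPs on every request. A minimal Python sketch of the latter, rotating round robin through whatever addresses the lookup returns; `resolve_replicas` uses the standard `socket.getaddrinfo`, while `LookupRoundRobin` and the service name `orders` are hypothetical. Whether a platform's DNS returns one virtual IP or one address per replica depends on its configuration.

```python
import socket
from typing import Callable


def resolve_replicas(hostname: str, port: int) -> list[str]:
    """Return the distinct IP addresses DNS currently reports for `hostname`."""
    infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    # info[4] is the socket address; its first element is the IP string.
    return list(dict.fromkeys(info[4][0] for info in infos))


class LookupRoundRobin:
    """Hypothetical client helper: re-resolve on every call and rotate through replicas."""

    def __init__(self, hostname: str, port: int,
                 resolver: Callable[[str, int], list[str]] = resolve_replicas) -> None:
        self.hostname = hostname
        self.port = port
        self.resolver = resolver
        self._calls = 0

    def next_address(self) -> str:
        # Look up the replicas each time so added or removed replicas are picked up.
        replicas = self.resolver(self.hostname, self.port)
        if not replicas:
            raise LookupError(f"no replicas found for {self.hostname}")
        address = replicas[self._calls % len(replicas)]
        self._calls += 1
        return address


# "orders" is a hypothetical service name resolvable inside the cluster network.
# balancer = LookupRoundRobin("orders", 8080)
# balancer.next_address()
```

Re-resolving on every call keeps the client current with scaling events but adds a lookup per request; caching the result for a short time is a common compromise.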

Interview A:

- Kubernetes does load balancing for them

Interview B:

- Need for load balancing (and service discovery, resilience patterns) since no fixed wiring between services is possible

- Where should that be? Gateway? Kubernetes? Service Meshes?

- Example for load balancing with round robin